Posted by Paul Crowley and Eric Biggers, Android Security & Privacy Team

Storage encryption protects your data if your phone falls into someone else's hands. Adiantum is an innovation in cryptography designed to make storage encryption more efficient for devices without cryptographic acceleration, to ensure that

all devices can be encrypted.

Today, Android offers storage encryption using the Advanced Encryption Standard (AES). Most new Android devices have hardware support for AES via the ARMv8 Cryptography Extensions. However, Android runs on a wide range of devices. This includes not just the latest flagship and mid-range phones, but also entry-level

Android Go phones sold primarily in developing countries, along with

smart watches and

TVs. In order to offer low cost options, device manufacturers sometimes use low-end processors such as the ARM Cortex-A7, which does not have hardware support for AES. On these devices, AES is so slow that it would result in a poor user experience; apps would take much longer to launch, and the device would generally feel much slower. So while storage encryption has been

required for most devices since Android 6.0 in 2015, devices with poor AES performance (50 MiB/s and below) are exempt. We've been working to change this because we believe that encryption is for everyone.

In HTTPS encryption, this is a solved problem. The

ChaCha20 stream cipher is much faster than AES when hardware acceleration is unavailable, while also being extremely secure. It is fast because it exclusively relies on operations that all CPUs natively support: additions, rotations, and XORs. For this reason,

in 2014 Google selected ChaCha20 along with the

Poly1305 authenticator, which is also fast in software, for a new TLS cipher suite to secure HTTPS internet connections. ChaCha20-Poly1305 has been standardized as

RFC7539, and it greatly improves HTTPS performance on devices that lack AES instructions.

However, disk and file encryption present a special challenge. Data on storage devices is organized into "sectors" which today are typically 4096 bytes. When the filesystem makes a request to the device to read or write a sector, the encryption layer intercepts that request and converts between plaintext and ciphertext. This means that we must convert between a 4096-byte plaintext and a 4096-byte ciphertext. But to use RFC7539, the ciphertext must be slightly larger than the plaintext; a little space is needed for the cryptographic

nonce and

message integrity information. There are software techniques for finding places to store this extra information, but they reduce efficiency and can impose significant complexity on filesystem design.

Where AES is used, the conventional solution for disk encryption is to use the XTS or CBC-ESSIV modes of operation, which are length-preserving. Currently Android supports AES-128-CBC-ESSIV for full-disk encryption and AES-256-XTS for file-based encryption. However, when AES performance is insufficient there is no widely accepted alternative that has sufficient performance on lower-end ARM processors.

To solve this problem, we have designed a new encryption mode called

Adiantum. Adiantum allows us to use the ChaCha stream cipher in a length-preserving mode, by adapting ideas from AES-based proposals for length-preserving encryption such as

HCTR and

HCH. On ARM Cortex-A7, Adiantum encryption and decryption on 4096-byte sectors is about 10.6 cycles per byte, around 5x faster than AES-256-XTS.

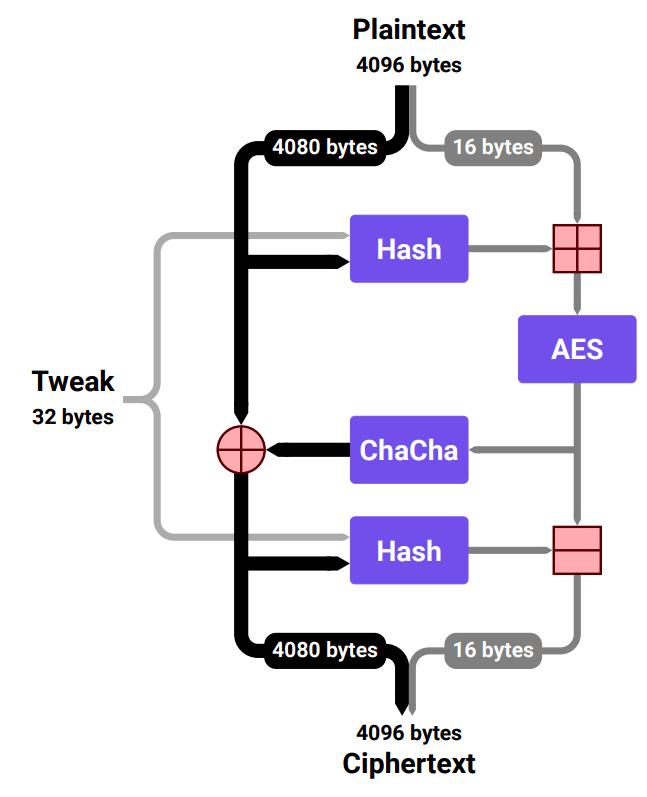

Unlike modes such as XTS or CBC-ESSIV, Adiantum is a true wide-block mode: changing any bit anywhere in the plaintext will unrecognizably change all of the ciphertext, and vice versa. It works by first hashing almost the entire plaintext using a keyed hash based on Poly1305 and another very fast keyed hashing function called NH. We also hash a value called the "tweak" which is used to ensure that different sectors are encrypted differently. This hash is then used to generate a nonce for the ChaCha encryption. After encryption, we hash again, so that we have the same strength in the decryption direction as the encryption direction. This is arranged in a configuration known as a Feistel network, so that we can decrypt what we've encrypted. A single AES-256 invocation on a 16-byte block is also required, but for 4096-byte inputs this part is not performance-critical.

Cryptographic primitives like ChaCha are organized in "rounds", with each round increasing our confidence in security at a cost in speed. To make disk encryption fast enough on the widest range of devices, we've opted to use the 12-round variant of ChaCha rather than the more widely used 20-round variant. Each round vastly increases the difficulty of attack; the 7-round variant was broken in 2008, and though many papers have improved on this attack, no attack on 8 rounds is known today. This ratio of rounds used to rounds broken today is actually better for ChaCha12 than it is for AES-256.

Even though Adiantum is very new, we are in a position to have high confidence in its security. In our paper, we prove that it has good security properties, under the assumption that ChaCha12 and AES-256 are secure. This is standard practice in cryptography; from "primitives" like ChaCha and AES, we build "constructions" like XTS, GCM, or Adiantum. Very often we can offer strong arguments but not a proof that the primitives are secure, while we can prove that if the primitives are secure, the constructions we build from them are too. We don't have to make assumptions about NH or the Poly1305 hash function; these are proven to have the cryptographic property ("ε-almost-∆-universality") we rely on.

Adiantum is named after the genus of the maidenhair fern, which in the Victorian language of flowers (floriography) represents sincerity and discretion.

Additional resources

The full details of our design, and the proof of security, are in our paper

Adiantum: length-preserving encryption for entry-level processors in IACR Transactions on Symmetric Cryptology; this will be presented at the Fast Software Encryption conference (FSE 2019) in March.

Generic and ARM-optimized implementations of Adiantum are available in the

Android common kernels v4.9 and higher, and in the

mainline Linux kernel v5.0 and higher. Reference code, test vectors, and a benchmarking suite are available at

https://github.com/google/adiantum.

Android device manufacturers can

enable Adiantum for either full-disk or file-based encryption on devices with AES performance <= 50 MiB/sec and launching with Android Pie. Where hardware support for AES exists, AES is faster than Adiantum; AES must still be used where its performance is above 50 MiB/s. In Android Q, Adiantum will be part of the Android platform, and we intend to update the

Android Compatibility Definition Document (CDD) to require that all new Android devices be encrypted using one of the allowed encryption algorithms.

Acknowledgements: This post leveraged contributions from Greg Kaiser and Luke Haviland. Adiantum was designed by Paul Crowley and Eric Biggers, implemented in Android by Eric Biggers and Greg Kaiser, and named by Danielle Roberts.